Machine Learning Fundamentals for eCommerce Entrepreneurs in the US

Warsaw. By Isaías Blanco - The following Machine Learning fundamentals summarize the essential concepts, approaches and critical ideas needed to understand, from an educational perspective, how data has transformed Search Marketing.

Machine Learning uses algorithms and statistical models that analyze the patterns in datasets to make predictions that support strategic decisions.

A machine learning model is a solution used to predict possible actions/reactions in a given data set. It is built by an algorithm that uses structured data to learn patterns and relationships included in the data set.

Furthermore, Machine Learning models are mathematical tools that allow Data Scientists to build simplified representations of real-world events in order to better understand and predict future behavior.

Each model is created from predictive algorithms, which are trained using a given data set, and they enhance the accuracy of the results based on three components: representation, evaluation, and optimization.

Now that we have seen how models make up the core of Machine Learning, it is worth mentioning some basic concepts:

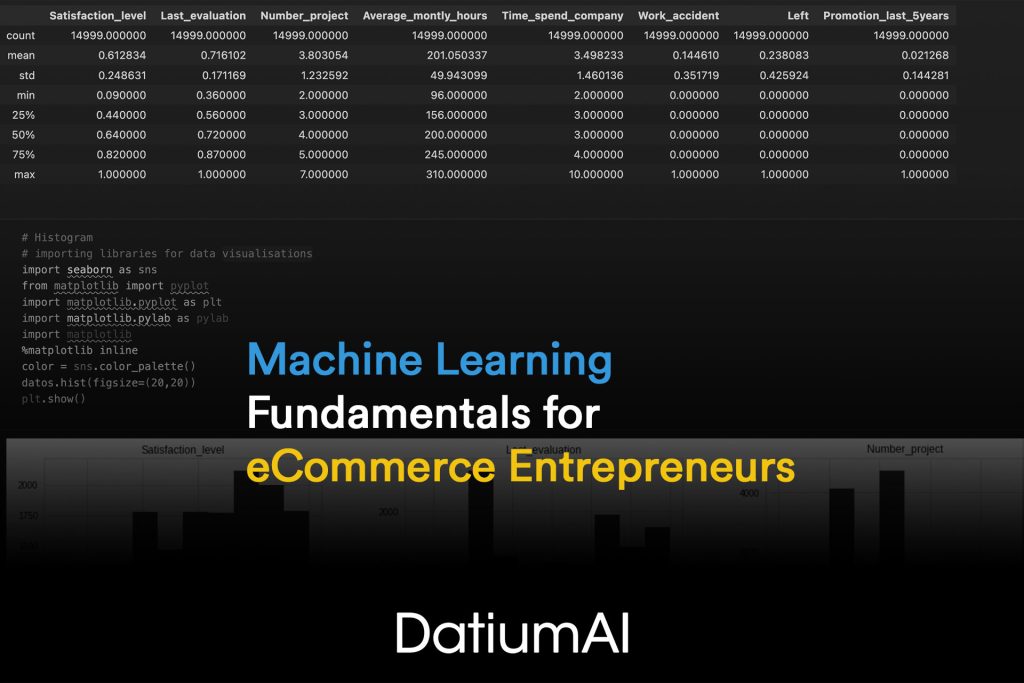

Data: Represents the raw information used to train and test machine learning models. Data can be structured (tables) or unstructured (text, videos, sounds or images).

Features: Describes the attributes or characteristics of data used as inputs to build machine learning models. The Features can be numerical (age or height) or categorical (gender or color).

Labels: Shows the outputs or outcomes of data used to supervise each machine learning model. The Labels can be continuous (price or temperature) or discrete (yes/no or positive/negative).

Loss: Measures how far a machine learning model’s predictions are from the data; the lower the loss, the better the fit. The Loss is commonly calculated by comparing a model’s predictions with the existing data labels.
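As a minimal sketch of this idea, the snippet below computes two common losses in plain Python: mean squared error for continuous labels and log loss (binary cross-entropy) for yes/no labels. The function names and the sample numbers are illustrative assumptions, not part of any specific library.

```python
import math

def mean_squared_error(labels, predictions):
    """Average squared difference between labels and predictions."""
    return sum((y - p) ** 2 for y, p in zip(labels, predictions)) / len(labels)

def log_loss(labels, probabilities, eps=1e-12):
    """Binary cross-entropy; labels are 0/1, probabilities are P(label == 1)."""
    total = 0.0
    for y, p in zip(labels, probabilities):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)

# Hypothetical continuous labels (prices) and a model's predictions
prices = [100.0, 150.0, 200.0]
predicted_prices = [110.0, 140.0, 205.0]
mse = mean_squared_error(prices, predicted_prices)  # 75.0

# Hypothetical yes/no labels (1 = bought) and predicted probabilities
bought = [1, 0, 1]
predicted_probability = [0.9, 0.2, 0.6]
ll = log_loss(bought, predicted_probability)

print(f"MSE: {mse:.1f}, log loss: {ll:.3f}")
```

In both cases a perfect model would score a loss of (almost) zero, and the value grows as predictions drift away from the labels.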

Learning rate: This represents the parameter that controls how much a machine learning model changes its weights at each step of the training period. A high learning rate can lead to faster convergence but also to instability, while a low learning rate results in slower convergence but more stable, and often more accurate, training.
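The effect of the learning rate can be sketched with a toy example. The code below assumes a made-up loss, `(w - 3)^2`, whose best weight is 3, and runs plain gradient descent with three different learning rates. The function name, the loss and the chosen rates are assumptions picked only for illustration.

```python
def gradient_descent(learning_rate, steps=50, start=0.0):
    """Minimize loss(w) = (w - 3)^2 and return the final weight."""
    w = start
    for _ in range(steps):
        gradient = 2 * (w - 3)         # derivative of the loss with respect to w
        w -= learning_rate * gradient  # the learning rate scales every update
    return w

w_low = gradient_descent(0.01)   # too low: still far from 3 after 50 steps
w_mid = gradient_descent(0.1)    # reasonable: ends very close to 3
w_high = gradient_descent(1.1)   # too high: each step overshoots and diverges

print(w_low, w_mid, w_high)
```

With the same number of steps, the low rate has not arrived yet, the middle rate converges, and the high rate moves further away from the optimum at every step.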

But before continuing, it is important to note that Machine Learning models can be categorized into different types depending on the task they perform and the structure of the data they work with.

Classification: Predict an object’s type or class within a finite number of options. A classification model can identify whether an email is spam or an image contains a white or black cat.
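To make the spam example concrete, here is a minimal sketch of a nearest-centroid classifier, one of the simplest classification techniques. The two features (number of links and number of exclamation marks per email), the sample emails and the function names are hypothetical.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def fit_centroids(features, labels):
    """Compute the mean feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda y: squared_distance(centroids[y], x))

# Hypothetical emails: [number of links, number of exclamation marks]
emails = [[8, 5], [7, 6], [1, 0], [0, 1]]
labels = ["spam", "spam", "not spam", "not spam"]

centroids = fit_centroids(emails, labels)
print(predict(centroids, [6, 4]))  # spam
print(predict(centroids, [1, 1]))  # not spam
```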

Regression: Predict a continuous value for an output variable. A regression model can estimate the price of a vacation resort based on the business features, city temperature, the season of the year or historical price data.
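A minimal sketch of regression with a single feature is shown below: it fits a straight line by ordinary least squares. The temperatures and prices are invented numbers, and the function name is an assumption for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: city temperature (Celsius) vs. nightly resort price (USD)
temperatures = [10, 15, 20, 25, 30]
prices = [80, 95, 110, 125, 140]

slope, intercept = fit_line(temperatures, prices)
predicted_price = slope * 22 + intercept  # estimate for a 22-degree day

print(slope, intercept, predicted_price)
```

A real pricing model would combine many features (season, location, historical prices), but the principle of fitting a function that outputs a number stays the same.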

Clustering: Group similar objects together based on their features. A clustering model can segment resort customers based on travel preferences. Also, it can be used to find topics in a collection of documents.
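As an illustration, the sketch below implements a bare-bones version of k-means, a widely used clustering algorithm. The customer data (trips per year, nights per trip) is hypothetical, and the centroids are initialized naively from the first `k` points, which is an assumption made to keep the example deterministic; practical implementations use smarter initialization.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def k_means(points, k, iterations=10):
    """Group points into k clusters; returns (centroids, assignments)."""
    centroids = [list(p) for p in points[:k]]  # naive initialization (assumption)
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, assignments

# Hypothetical customers: (trips per year, nights per trip)
customers = [(2, 1), (9, 8), (1, 2), (8, 9), (2, 2), (9, 9)]
centroids, assignments = k_means(customers, k=2)

print(assignments)  # [0, 1, 0, 1, 0, 1]
```

No labels are provided here: the two customer segments emerge only from how close the points are to each other.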

Dimensionality Reduction: Reduce the number of dataset features or dimensions while preserving essential information. A dimensionality reduction model can compress images with little visible loss of quality. It also helps to visualize high-dimensional data in two or three dimensions.
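One common technique for this task is Principal Component Analysis (PCA). The sketch below is a simplified version that finds only the first principal component using power iteration, then projects two-feature rows onto a single dimension. The data is invented (the second feature is exactly twice the first, so it carries no extra information), and the function names are assumptions; the code also assumes the data has some variance.

```python
import math

def first_principal_component(rows, iterations=100):
    """Return (means, direction) of the axis with the greatest variance."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centered data
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Power iteration: repeatedly multiply by cov and normalize
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v

def project(rows, means, direction):
    """Map each row to a single coordinate along the given direction."""
    return [
        sum((r[j] - means[j]) * direction[j] for j in range(len(direction)))
        for r in rows
    ]

# Hypothetical rows with two redundant features
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
means, direction = first_principal_component(rows)
reduced = project(rows, means, direction)

print(direction)
print(reduced)
```

Each row is now described by one number instead of two, and because the original features were redundant, nothing essential is lost in this particular case.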

Deep Learning: Use multiple layers of artificial neural networks to learn complex patterns and functions from data. A deep learning model can recognize faces in images or generate natural language text.
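To show why stacking layers matters, here is a toy sketch of a network with one hidden layer that computes XOR ("one or the other, but not both"), a function that a single linear layer cannot represent. The weights are hand-picked for illustration rather than learned from data, and the function names are assumptions.

```python
def relu(x):
    """Rectified linear unit, a common activation function."""
    return max(0.0, x)

def tiny_network(x1, x2):
    """Two-layer network with hand-picked (not learned) weights."""
    # Hidden layer: two neurons, each a weighted sum followed by ReLU
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output layer: weighted sum of the hidden activations
    return 1.0 * h1 - 2.0 * h2

outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)  # {(0, 0): 0.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 0.0}
```

Real deep learning models follow the same pattern, with many more layers and neurons, and with weights adjusted automatically during training.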

Now that we have a good grasp of the fundamental concepts behind a model, it is time to mention that a model can be supervised or unsupervised. But what does that mean?

Supervised:

- It uses labeled data to assign each input a corresponding output or target value.

- It learns to map inputs to outputs by minimizing a loss function that measures the error between predictions and labels.

- It can be used for tasks such as classification or regression.

- Predicts outputs from inputs

- It fits the model to known answers (the labels).

Unsupervised:

- It does not assign an output or target value to each input because it uses unlabeled data.

- Discovers data patterns, features, or structures without human guidance or manipulation.

- It is used for clustering or dimensionality reduction.

- It finds patterns or structures in the data

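- It uses techniques such as clustering, association rules or anomaly detection (decision trees, by contrast, are normally trained on labeled data) to answer questions such as: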

- What products are connected?

- Who are similar customers?

- Can I detect the rules?

- Are there outliers?
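The last question, "Are there outliers?", can be sketched without any labels. The example below flags values that sit more than a chosen number of standard deviations away from the mean (a z-score rule). The order values, the function name and the threshold of 2.0 are all assumptions for illustration; a suitable threshold depends on the data.

```python
import statistics

def find_outliers(values, threshold=2.0):
    """Return values whose z-score exceeds the threshold."""
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mean) / stdev > threshold]

# Hypothetical order values in USD from an online store
order_values = [20, 22, 19, 21, 23, 20, 250]
outliers = find_outliers(order_values)

print(outliers)  # [250]
```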

Testing and validation:

Validation is one of the most challenging phases of Machine Learning model development because it determines how accurate and trustworthy the results are, whether the model is supervised or unsupervised.

So, ML specialists and Data Scientists agree that the best way to validate a model is to split the data into two sets: one is the training set, and the other is the test set.

The main goal of validation is to measure how well the model can predict the class labels of new data that it did not see during training.

Common steps for validating a classification model:

- Split the data into training and test sets using a ratio of 70:30 or 80:20.

- The training set will be used to fit the model.

- The test set will be used to evaluate the model’s performance on unseen data.

- Apply your model to the test set and compare the predicted and actual labels.

- Measure the accuracy and error of the model with the following metrics: confusion matrix, precision, recall, F1-score, ROC curve, and AUC score.

- Check for overfitting or underfitting.

- Overfitting: the model performs well on the training set but poorly on the test set, indicating that it has learned too much from the specific features of the training data and cannot generalize well to new data.

- Underfitting: the model performs poorly on both sets, indicating that it has not learned enough from the data and cannot capture its complexity.
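The steps above can be sketched in plain Python as follows. The helper names, the fixed random seed and the example labels are assumptions for illustration; the predicted labels are written by hand here and stand in for the output of any trained classifier.

```python
import random

def train_test_split(rows, test_ratio=0.2, seed=42):
    """Shuffle rows and split them into (training set, test set)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]

def confusion_counts(actual, predicted, positive=1):
    """Return (true pos., false pos., false neg., true neg.) counts."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    tn = sum(1 for a, p in pairs if a != positive and p != positive)
    return tp, fp, fn, tn

def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1-score from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

# 80:20 split of ten hypothetical rows
rows = list(range(10))
train_rows, test_rows = train_test_split(rows, test_ratio=0.2)

# Hypothetical actual vs. predicted labels on a test set
actual = [1, 1, 1, 0, 0, 0, 1, 0]
predicted = [1, 0, 1, 0, 1, 0, 1, 0]

tp, fp, fn, tn = confusion_counts(actual, predicted)
accuracy = (tp + tn) / len(actual)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)

print(len(train_rows), len(test_rows))
print(tp, fp, fn, tn, accuracy, precision, recall, f1)
```

To check for overfitting, the same metrics can be computed on the training set as well: a large gap between training and test scores is the warning sign described above.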

As a complementary step, techniques such as cross-validation, regularization, feature selection or dimensionality reduction help to avoid overfitting or underfitting.
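As an illustration of cross-validation, the sketch below generates the index splits for k-fold cross-validation: the data is divided into `k` folds, and each fold takes one turn as the test set while the rest is used for training. The function name is an assumption, and shuffling is left out to keep the example short.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

folds = list(k_fold_indices(10, 5))
for train, test in folds:
    print("train:", train, "test:", test)
```

Averaging the model's score over all folds gives a more reliable estimate than a single train/test split, because every row is used for testing exactly once.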

But to better validate the entire Model, Machine Learning specialists recommend fine-tuning the model’s parameters or hyperparameters if needed.

- Parameters are values that your model learns during training, such as weights or biases.

- Hyperparameters are values set before training, such as the learning rate or the number of iterations.
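The difference can be seen in a small sketch. Below, the weight `w` is a parameter learned during training, while `learning_rate` and `epochs` are hyperparameters chosen beforehand. The loop at the end tries several learning rates and keeps the one with the lowest loss on a separate validation set. The data, the function names and the candidate values are assumptions for illustration.

```python
def train(xs, ys, learning_rate, epochs):
    """Fit y = w * x by gradient descent; w is the learned parameter."""
    w = 0.0
    for _ in range(epochs):
        gradient = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= learning_rate * gradient
    return w

def mse(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical training and validation data following y = 2 * x
train_x, train_y = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
val_x, val_y = [4.0, 5.0], [8.0, 10.0]

# Try several values of the learning rate (a hyperparameter)
results = {}
for lr in [0.001, 0.01, 0.1, 0.3]:
    w = train(train_x, train_y, learning_rate=lr, epochs=20)
    results[lr] = mse(w, val_x, val_y)

best_lr = min(results, key=results.get)
print(results)
print("best learning rate:", best_lr)
```

In this toy setup the smallest rates have not converged after 20 epochs and the largest one diverges, so the search settles on the value in between.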